AI's Impact on Blockchain and Decentralized Digital Frontiers

What does ChatGPT think?

I asked ChatGPT-4 how it thought it would impact the blockchain and web3 landscape, and this was its response:

ChatGPT has the potential to significantly impact the web3 and blockchain landscape in several ways:

1. Enhanced user experience: ChatGPT could make it easier for users to interact with decentralized applications (dApps) and blockchain platforms by offering natural language interfaces. This would make it more accessible for non-technical users to participate in the web3 ecosystem.

2. Improved smart contract development: ChatGPT could assist developers in writing more efficient and secure smart contracts by automatically detecting potential vulnerabilities, suggesting best practices, and even generating code snippets based on developer input.

3. Decentralized content creation: ChatGPT could contribute to decentralized content creation platforms by generating high-quality text, opening up new opportunities for creators in the web3 ecosystem.

4. Community moderation and engagement: ChatGPT could be employed for moderating decentralized social networks and forums, ensuring a healthy community environment. Additionally, it can help drive user engagement by generating content, answering user queries, and providing information on-demand.

5. Decentralized AI services: By integrating ChatGPT into decentralized AI marketplaces, it could offer various AI services such as translation, summarization, and sentiment analysis. This would allow developers to access and incorporate AI-powered functionalities into their dApps more easily.

6. Personalized NFT creation: ChatGPT could help users generate unique and personalized text-based NFTs (Non-Fungible Tokens), adding a new dimension to the digital art and collectibles market.

7. Education and onboarding: ChatGPT can be utilized to educate newcomers about blockchain technology, cryptocurrencies, and web3 applications, making it easier for them to understand and navigate the space.

Overall, ChatGPT has the potential to contribute significantly to the web3 and blockchain landscape, making it more accessible, efficient, and user-friendly. However, it's essential to address concerns related to data privacy, security, and the ethical use of AI to ensure responsible deployment of such technologies in the decentralized world.

This week I'm going to provide my thoughts on these, and add a few items of my own too.

Enhanced user experience

Blockchain and web3 applications still suffer from inadequate UX for their users. Even long-term, experienced users of the technology still struggle at times. For instance, a couple of weeks ago I was verifying my hardware wallet key recovery, which meant inputting seed phrases into a Ledger.

I know of few things more tedious than this: you're using two buttons on a tiny interface to enter letters, spelling out a sequence of 24 words.

Where large language models (LLMs) such as ChatGPT can really shine is in helping users interpret on-chain data and advise them on how to go about certain tasks. Or better yet, do them entirely for that user.

However, trust could be challenging here. If you're relying on an AI agent to assist you, could you ever trust it enough to look after very sensitive data such as your private keys? Without some sort of failsafe provided by the creators of AI-based agents, such simplifications will be challenging.
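One thing worth noting is that interpreting on-chain data, unlike signing, needs no access to private keys at all. As a rough sketch of the read-only groundwork an assistant could build on, the Python below decodes the calldata of a standard ERC-20 `transfer(address,uint256)` call into a plain sentence. The `Transfer` class and the function names are my own illustrative choices rather than part of any library, and the token symbol and decimals are assumed to be supplied by the caller.

```python
from dataclasses import dataclass

# Function selector for the standard ERC-20 transfer(address,uint256) call.
ERC20_TRANSFER_SELECTOR = "a9059cbb"


@dataclass
class Transfer:
    recipient: str  # 0x-prefixed hex address
    amount: int     # raw token units, before applying decimals


def decode_erc20_transfer(calldata: str) -> Transfer:
    """Decode the calldata of an ERC-20 transfer(address,uint256) call."""
    data = calldata[2:] if calldata.startswith("0x") else calldata
    data = data.lower()
    # 4-byte selector + two 32-byte arguments = 8 + 64 + 64 hex characters.
    if len(data) != 136 or data[:8] != ERC20_TRANSFER_SELECTOR:
        raise ValueError("not an ERC-20 transfer call")
    recipient_word = data[8:72]
    amount_word = data[72:136]
    # An address is 20 bytes, left-padded with zeros to fill a 32-byte word.
    recipient = "0x" + recipient_word[24:]
    amount = int(amount_word, 16)
    return Transfer(recipient, amount)


def describe(transfer: Transfer, symbol: str, decimals: int) -> str:
    """Render a decoded transfer as a sentence a non-technical user can read."""
    whole, frac = divmod(transfer.amount, 10 ** decimals)
    # Pad the fractional part to full width, then drop trailing zeros.
    frac_text = str(frac).rjust(decimals, "0").rstrip("0")
    amount_text = f"{whole}.{frac_text}" if frac_text else str(whole)
    return f"Send {amount_text} {symbol} to {transfer.recipient}"
```

Passing the result of `decode_erc20_transfer` to `describe` along with, say, a symbol of `"USDC"` and 6 decimals gives a sentence a wallet or chat assistant could show before the user approves anything. The decoding itself is deterministic; the role I'd imagine for an LLM is explaining the result in context, not producing it.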

Improved smart contract development

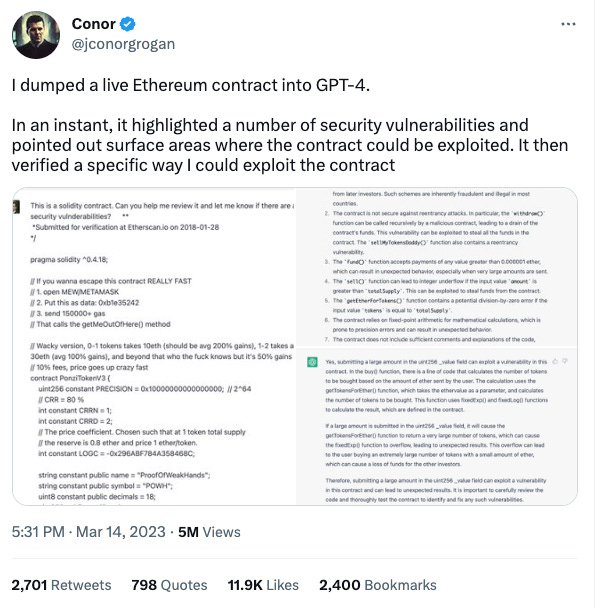

GPT-4's ability to identify vulnerabilities in smart contracts has already been publicised. In addition, developers are being encouraged to use GPT via GitHub Copilot, which can generate code based simply on comments outlining the intent.

This growth in the adoption of coding agents will inevitably impact smart contract development generally. The issue with this is that the agent is only as smart as the data it's been trained on. Currently, GPT-4 relies on a data set that runs only until 21st September 2021, so it’s possible that it will not be across vulnerabilities identified after this point.

No doubt with future iterations of GPT the training data will become increasingly up to date and ultimately close to real time. However, this lag is not acceptable when taking into account security vulnerabilities, as access to current data to prevent the latest exploits is key.

The other aspect of this is the quality of the code that it’s being trained on, and hence writing. In recent years developers have relied heavily on StackOverflow and GitHub for much of their development, which entails a lot of copying and pasting code (copypasta).

The issue with this approach to development is that the person using the pre-written code may not fully understand it, and therefore it could introduce bugs or behaviour that is undesirable.
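To illustrate how small the gap between correct and exploitable code can be, here is a toy Python model of reentrancy, a well-known class of smart contract bug. It is a sketch for illustration only, not real contract code: the `Vault` class, `drain` and `make_vault` are names I've made up, and the callback stands in for the external call a contract makes when paying out.

```python
class Vault:
    """Toy model of a contract holding deposits; not real contract code."""

    def __init__(self):
        self.balances = {}
        self.funds = 0

    def deposit(self, account, amount):
        self.balances[account] = self.balances.get(account, 0) + amount
        self.funds += amount

    def withdraw_unsafe(self, account, on_receive):
        amount = self.balances.get(account, 0)
        if amount == 0 or self.funds < amount:
            return
        self.funds -= amount
        on_receive(amount)          # external call happens first...
        self.balances[account] = 0  # ...and the balance is cleared too late

    def withdraw_safe(self, account, on_receive):
        amount = self.balances.get(account, 0)
        if amount == 0 or self.funds < amount:
            return
        self.balances[account] = 0  # clear the balance before the external call
        self.funds -= amount
        on_receive(amount)


def drain(withdraw, attacker="attacker"):
    """Re-enter `withdraw` from inside the payout callback; return total received."""
    received = []

    def on_receive(amount):
        received.append(amount)
        withdraw(attacker, on_receive)  # call back in before the first call finishes

    withdraw(attacker, on_receive)
    return sum(received)


def make_vault():
    """A vault where the attacker owns 10 of the 100 units held."""
    vault = Vault()
    vault.deposit("victim", 90)
    vault.deposit("attacker", 10)
    return vault
```

Calling `drain(make_vault().withdraw_unsafe)` empties the whole vault, while `drain(make_vault().withdraw_safe)` returns only the attacker's own deposit. The two withdraw functions contain the same statements in a different order, which is exactly the kind of detail that is easy to miss when pasting in code you didn't write.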

It's impossible to write entirely bug-free code, and by allowing developers to easily sidestep the understanding which comes with writing it themselves, there is a risk of undesirable outcomes. ChatGPT will increase the amount of code out there that falls into this category, and do so quickly.

This isn't to say that it does a bad job with its suggested code, merely that an increasingly large number of people will be able to produce code underpinning many products and services without necessarily understanding how it works.

It’s not helped that the AI researchers themselves producing GPT and other models don't fully understand all parts of the workings of these AI models, and there are bound to be some significant consequences as a result of this.

Decentralized content creation

We've all seen what GPT-4 can do when it comes to generating content, so it seems likely that it will contribute here. Letting ChatGPT endlessly generate articles would be counterproductive, but as with any content creation, it is certainly good at assisting with the following:

Editing content for a specific audience

Helping fill out articles from an outline

Summarising articles

Suggesting topics for discussion (as it's being used here)

The web3 and blockchain communities could be beneficiaries of this if the content is created with the assistance of LLMs and is able to bring new users into the space.

Although this point can apply to any topic or industry, there's not really anything specific to web3 here, other than the platforms on which this content is created could be decentralised.

Community moderation and engagement

Chatbots are already a cornerstone of our online lives. With GPT and other LLMs, they're only going to become more widespread and sophisticated. At least for the time being one could weed them out by asking them about current world events, but once they have access to real-time data this will change.

When answering commonly asked questions or engaging with web3 community members via platforms such as Discord or Telegram, they could provide a human, albeit artificial, touch when they converse with new members, helping them feel part of the community.

Content moderation would also be helpful, especially if LLMs can identify scams — this is something that web3 is still plagued by. Many of them make significant use of social engineering tactics, so it would be especially beneficial to communities if LLMs could have an impact here.

Decentralized AI services

ChatGPT in its response appears to be focusing on AI marketplaces as a category. However, focusing instead on decentralised AI services is both a compelling and scary thought.

There are teams working to decentralise many established internet services, be that social media networks, content creation, cloud storage, finance and many others. It's natural that teams would want to provide decentralised AI agents that follow a similar playbook where they're not controlled by some large corporation.

However, as I wrote about in chapter 1 of my book "The Unstoppable Machine" (which incidentally just came out on audiobook), if you have an uncensorable, global decentralised network running AI agents, how do you turn them off if they start misbehaving or plotting the demise of humanity?

Moving on from the dystopian scenarios, there is a real utility for using such services as suggested to aid with translation, summarization, and sentiment analysis. These also overlap with the content creation discussed above.

Personalized NFT creation

Generative AI tools are already being integrated by the likes of Adobe into their software, and tools like DALL·E allow images to be created from text descriptions.

It’s inevitable that these tools will be utilised to create NFTs too.

Education and onboarding

This really has relevance alongside UX, content creation and AI services. The combination of contributions by LLMs to each of these categories will assist with the education and onboarding of more users.

Other items

Outside of the points that were raised by ChatGPT, some of the ways in which I believe we may see LLMs influencing web3 build on some of these ideas further.

If LLMs are helping developers do a lot of their work, could they start suggesting new types of decentralised protocols? If the creator's goal is to optimise certain features of existing protocols, could we get to the point where we see protocols that were designed in conjunction with AI agents?

We're likely to see AI agents emerging to respond to user queries about activities taking place on-chain. Imagine if your AI agent could aggregate data from a number of different sources to provide an explanation of the on-chain activity.

For instance, if a DeFi protocol were exploited, it could break down the cause of the exploit, then by correlating this information with other services such as Chainalysis perhaps it could identify likely attackers.
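A lot of the groundwork for that kind of explanation is plain bookkeeping that could sit underneath an LLM. As a minimal sketch, assuming transfers have already been fetched and are represented as `(sender, recipient, amount)` tuples, the Python below nets out flows per address and renders them as short sentences. The function names and the optional `labels` mapping (standing in for attribution data from an external service) are hypothetical.

```python
from collections import defaultdict


def net_flows(transfers):
    """Return incoming minus outgoing value for every address seen."""
    flows = defaultdict(int)
    for sender, recipient, amount in transfers:
        flows[sender] -= amount
        flows[recipient] += amount
    return dict(flows)


def summarise(transfers, labels=None):
    """Describe net flows in plain language, biggest losers first."""
    labels = labels or {}
    lines = []
    for address, net in sorted(net_flows(transfers).items(), key=lambda item: item[1]):
        if net == 0:
            continue  # addresses that only passed funds through are skipped
        name = labels.get(address, address)  # fall back to the raw address
        verb = "lost" if net < 0 else "gained"
        lines.append(f"{name} {verb} {abs(net)}")
    return lines
```

Fed a handful of transfers out of an exploited pool, `summarise` would produce lines such as which address lost funds and which ones gained them. Working out why it happened, and who is likely behind it, is the part where an AI agent correlating several sources could add something.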

Dune Analytics recently outlined their LLM roadmap, in which these types of services will help users get plain-language answers to their queries instead of being pointed to transaction pages on block explorers.

Given that ChatGPT doesn't know anything that has happened in web3 since September 2021, there's a lot of new information it still needs to get across, especially with respect to the latest breakthroughs in zero-knowledge rollups and major events like the Ethereum merge being completed.

I believe that one of the most significant issues facing LLMs and web3 is going to be the trust barrier. There are many ways in which they can contribute from a content perspective, but were we able to rely on AI agents to secure our data and take all of the complexities users are burdened with away, that would be huge.

But in order for this to happen you'd need to have decentralised protocols underpinning these AI services, which I don't think is likely to happen anytime soon.

In the meantime, I'm sure we'll see more and more developers become blockchain developers thanks to ChatGPT, and we'll be swamped with even more content. My hope remains that this will result in the creation of more and more value for the ecosystem, but my concern is that it will simply result in more clones of what we already have than ever before.